Optimization algorithm¶

In the previous sections, we applied structural optimization to various examples. In this section, we will learn about the local optimization algorithms used in structural optimization.

Algorithm type¶

As local optimization algorithms, ASE provides MD-like methods (MDMin and FIRE, which perform optimization with Molecular Dynamics (MD)-like behavior) and quasi-Newton methods (BFGS, LBFGS, BFGSLineSearch, which is also available under the name QuasiNewton, and LBFGSLineSearch).

| Algorithm | Group | Description |
|---|---|---|
| MDMin | MD-like | Performs MD, but resets momentum to 0 if the inner product of momentum and force is negative. |
| FIRE | MD-like | MD, with various additional innovations to make it fast and robust. |
| BFGS | Quasi-Newton | Approximates the Hessian from the optimization trajectory and runs the Newton method using the approximated Hessian. |
| LBFGS | Quasi-Newton | A variant of BFGS designed to run at high speed with low memory use. |
| BFGSLineSearch | Quasi-Newton | BFGS determines the direction of optimization, and LineSearch determines the step size. |
| LBFGSLineSearch | Quasi-Newton | LBFGS determines the direction of optimization, and LineSearch determines the step size. |

We exclude MDMin from the benchmark because of its large hyperparameter dependence and frequent structural optimization failures. Let’s look at other optimizers.

FIRE¶

FIRE basically performs MD, but some ingenious techniques are included for fast convergence.
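
To make the idea concrete, here is a minimal pure-Python sketch of a FIRE-style loop on a toy two-variable function. It is not ASE's implementation: the function name `fire_minimize`, unit masses, and all parameter values are illustrative assumptions. It shows the two main ingredients: the velocity is mixed toward the force direction while the system moves downhill, and the velocity is reset (with a smaller time step) as soon as the system starts moving uphill.

```python
import math


def fire_minimize(grad, x0, fmax=1e-3, max_steps=2000, dt=0.1, dt_max=1.0,
                  n_min=5, f_inc=1.1, f_dec=0.5, alpha_start=0.1,
                  f_alpha=0.99, max_step=0.2):
    """Toy FIRE loop with unit masses; returns (x, steps, converged)."""
    x = list(x0)
    v = [0.0] * len(x)
    alpha = alpha_start
    n_pos = 0  # number of consecutive downhill steps
    for step in range(max_steps):
        f = [-g for g in grad(x)]  # force is the negative gradient
        if max(abs(fi) for fi in f) < fmax:
            return x, step, True
        if step > 0:
            power = sum(fi * vi for fi, vi in zip(f, v))
            if power > 0.0:
                # Moving downhill: mix the velocity toward the force direction
                f_norm = math.sqrt(sum(fi * fi for fi in f))
                v_norm = math.sqrt(sum(vi * vi for vi in v))
                v = [(1.0 - alpha) * vi + alpha * v_norm * fi / f_norm
                     for vi, fi in zip(v, f)]
                if n_pos > n_min:
                    dt = min(dt * f_inc, dt_max)  # accelerate
                    alpha *= f_alpha
                n_pos += 1
            else:
                # Moving uphill: stop, shrink the time step, reset the mixing
                v = [0.0] * len(x)
                alpha = alpha_start
                dt *= f_dec
                n_pos = 0
        # Plain MD (Euler) update, with the displacement capped at max_step
        v = [vi + dt * fi for vi, fi in zip(v, f)]
        dx = [dt * vi for vi in v]
        dx_norm = math.sqrt(sum(d * d for d in dx))
        if dx_norm > max_step:
            dx = [max_step * d / dx_norm for d in dx]
        x = [xi + d for xi, d in zip(x, dx)]
    return x, max_steps, False


# Anisotropic quadratic bowl E = 0.5 * (x^2 + 2 y^2), minimum at the origin
x_opt, n_steps, converged = fire_minimize(lambda x: [x[0], 2.0 * x[1]], [1.0, 1.0])
print(converged, n_steps, x_opt)
```

Note that no Hessian is involved: only forces are used, which is why FIRE tends to be robust far from a minimum but needs more steps close to it.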

Newton method¶

The Newton method uses the Hessian and the gradient to determine the next step. It converges quadratically: when the potential is close to a quadratic function (that is, close to a minimum), the error at each step is roughly proportional to the square of the previous error, so the method approaches the exact solution very quickly. With the Hessian as \(H\) and the gradient as \(\vec{g}\), the next optimization step \(\vec{p}\) is expressed as follows:

\(\vec{p} = - H^{-1} \vec{g}\)

If the potential were strictly quadratic, it would converge to a minimum in one step.
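
The one-step property can be checked on a small worked example. The sketch below uses a hypothetical two-variable quadratic potential (not taken from this tutorial) and an explicit 2x2 inverse; the helper name `newton_step_2d` is an assumption for illustration.

```python
def newton_step_2d(hessian, gradient):
    """Solve H p = -g for a 2x2 Hessian using the explicit inverse."""
    (a, b), (c, d) = hessian
    det = a * d - b * c
    gx, gy = gradient
    # H^{-1} = 1/det * [[d, -b], [-c, a]]
    px = -(d * gx - b * gy) / det
    py = -(-c * gx + a * gy) / det
    return px, py


# Toy potential E(x, y) = x^2 + x*y + 1.5*y^2 - 4*x - 7*y, minimum at (1, 2)
H = ((2.0, 1.0), (1.0, 3.0))


def toy_gradient(x, y):
    return (2.0 * x + y - 4.0, x + 3.0 * y - 7.0)


x0, y0 = 5.0, -3.0
px, py = newton_step_2d(H, toy_gradient(x0, y0))
x1, y1 = x0 + px, y0 + py
print(x1, y1)  # the gradient vanishes here after a single step
```

For a non-quadratic potential the same step is only an estimate of the minimum, which is why the methods below need several iterations.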

Quasi-Newton Method¶

Evaluating the Hessian is expensive (for example, obtaining it numerically by central finite differences requires 6N force calculations for N atoms: two displacements for each of the 3N coordinates), so the time spent computing the Hessian is often better spent advancing the structural optimization itself. A quasi-Newton method therefore approximates the Hessian cheaply from the optimization trajectory. Since the Hessian is not exact, it does not converge in as few steps as the Newton method, but it still converges fast.
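
The cost of a numerical Hessian can be illustrated with a small sketch. It assumes central finite differences of the forces and uses a hypothetical toy system (two atoms joined by a zero-length harmonic spring); the function names are illustrative and not part of ASE.

```python
def numerical_hessian(forces, positions, delta=1e-3):
    """Central-difference Hessian; returns (hessian, number of force calls)."""
    n = len(positions)
    hessian = [[0.0] * n for _ in range(n)]
    calls = 0
    for j in range(n):
        plus = list(positions)
        plus[j] += delta
        minus = list(positions)
        minus[j] -= delta
        f_plus = forces(plus)
        f_minus = forces(minus)
        calls += 2  # two force evaluations per coordinate
        for i in range(n):
            # H_ij = d^2 E / dx_i dx_j = -dF_i / dx_j
            hessian[i][j] = -(f_plus[i] - f_minus[i]) / (2.0 * delta)
    return hessian, calls


def spring_forces(pos, k=2.0):
    """Forces for E = 0.5 * k * |r1 - r2|^2 with pos = [x1, y1, z1, x2, y2, z2]."""
    d = [pos[i] - pos[i + 3] for i in range(3)]
    return [-k * di for di in d] + [k * di for di in d]


positions = [0.0, 0.0, 0.0, 1.0, 0.5, -0.2]
hess, calls = numerical_hessian(spring_forces, positions)
print(calls)  # 2 evaluations x 3N coordinates = 6N for N = 2 atoms
```

With hundreds of atoms this means thousands of force evaluations before the first optimization step, which is the motivation for the quasi-Newton approach.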

BFGS¶

The BFGS method is the most standard Hessian approximation scheme among the quasi-Newton methods. Other schemes, such as the SR1 and DFP methods, are also known, but BFGS is the one implemented in ASE.
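
As a rough illustration, the textbook BFGS update of a Hessian approximation \(B\) from a position change \(\vec{s}\) and a gradient change \(\vec{y}\) can be sketched as below. This is the generic formula, not a copy of ASE's code, and the function name `bfgs_update` is an assumption. The key property is the secant condition: the updated matrix maps \(\vec{s}\) exactly onto \(\vec{y}\).

```python
def bfgs_update(B, s, y):
    """Textbook BFGS update for a symmetric Hessian approximation B.

    B_new = B + y y^T / (y^T s) - (B s)(B s)^T / (s^T B s)
    """
    n = len(s)
    Bs = [sum(B[i][j] * s[j] for j in range(n)) for i in range(n)]
    ys = sum(y[i] * s[i] for i in range(n))    # must be positive
    sBs = sum(s[i] * Bs[i] for i in range(n))
    return [[B[i][j] + y[i] * y[j] / ys - Bs[i] * Bs[j] / sBs
             for j in range(n)] for i in range(n)]


B0 = [[1.0, 0.0], [0.0, 1.0]]  # initial guess: identity
s = [0.1, 0.0]                 # change in position between two steps
y = [0.3, 0.1]                 # change in gradient between the same steps
B1 = bfgs_update(B0, s, y)

# Secant condition: B1 applied to s reproduces y
B1s = [sum(B1[i][j] * s[j] for j in range(2)) for i in range(2)]
print(B1s)
```

Each update only needs quantities that the optimizer has already computed, so the Hessian estimate improves at no extra force-evaluation cost.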

LBFGS¶

One drawback of the BFGS method is that it must store a matrix whose size is the dimension of the variables being optimized and compute matrix-vector products with it. For dimension \(N\), this requires \(O(N^2)\) memory and \(O(N^2)\) time per step, which makes it unsuitable for high-dimensional functions. The LBFGS method instead uses only the information from the last few steps of the trajectory and reformulates the update so that the results are almost the same as those of BFGS, at a computational cost of \(O(N)\). Since the computational cost of PFP is \(O(N)\), the time spent inside the optimizer becomes noticeable from about 300 atoms with an \(O(N^2)\) method like BFGS. With LBFGS, the time spent in the optimizer remains negligible even as the number of atoms increases.
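
The standard way to obtain this \(O(N)\) cost is the so-called two-loop recursion, sketched below in pure Python. It is the generic textbook form, not ASE's code, and the function name `lbfgs_direction` and the sample vectors are assumptions. Only the last few pairs of position changes `s` and gradient changes `y` are stored, and no matrix is ever formed.

```python
def lbfgs_direction(grad, s_hist, y_hist):
    """Return the search direction -H*grad via the two-loop recursion.

    s_hist and y_hist hold the stored pairs, oldest first.
    """
    def dot(a, b):
        return sum(ai * bi for ai, bi in zip(a, b))

    q = list(grad)
    coeffs = []
    # First loop: newest pair to oldest pair
    for s, y in zip(reversed(s_hist), reversed(y_hist)):
        rho = 1.0 / dot(y, s)
        alpha = rho * dot(s, q)
        q = [qi - alpha * yi for qi, yi in zip(q, y)]
        coeffs.append((rho, alpha))
    # Scale by a simple initial inverse-Hessian guess gamma * I
    if s_hist:
        gamma = dot(s_hist[-1], y_hist[-1]) / dot(y_hist[-1], y_hist[-1])
    else:
        gamma = 1.0
    r = [gamma * qi for qi in q]
    # Second loop: oldest pair to newest pair
    for (s, y), (rho, alpha) in zip(zip(s_hist, y_hist), reversed(coeffs)):
        beta = rho * dot(y, r)
        r = [ri + si * (alpha - beta) for ri, si in zip(r, s)]
    return [-ri for ri in r]


s_hist = [[0.1, 0.0], [0.0, 0.2]]
y_hist = [[0.2, 0.05], [0.05, 0.6]]
print(lbfgs_direction([1.0, -2.0], [], []))        # no history: steepest descent
print(lbfgs_direction(y_hist[-1], s_hist, y_hist))  # approximately -s_hist[-1]
```

The second call illustrates the secant condition of the implicit inverse Hessian: applied to the most recent gradient change, it returns the most recent position change. Memory and time scale with the number of stored pairs times \(N\).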

LineSearch¶

If the potential is not quadratic, the Newton method may oscillate. A quasi-Newton method may also produce an inappropriate step size because its Hessian is only approximate. In these cases, LineSearch can be used to stabilize the optimization. After the quasi-Newton method determines the search direction, it is desirable to choose a step size such that the energy becomes sufficiently small along that direction. LineSearch determines such a step size by actually evaluating several points. With BFGS, a step computed according to the Newton method may sometimes increase the energy, whereas BFGSLineSearch searches for a good step size.
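
The following sketch illustrates the idea with a simple backtracking search that enforces a sufficient-decrease (Armijo) condition. This is a deliberately simplified stand-in: ASE's LineSearch is more elaborate, and the function names, the plain Lennard-Jones form (epsilon = sigma = 1, no cutoff), and the parameter values here are assumptions for illustration only.

```python
def lj_energy(r):
    """Plain Lennard-Jones pair energy with epsilon = sigma = 1."""
    return 4.0 * (r ** -12 - r ** -6)


def lj_gradient(r):
    """Derivative dE/dr of the pair energy above."""
    return 4.0 * (-12.0 * r ** -13 + 6.0 * r ** -7)


def backtracking_step(r, direction, alpha0=0.2, c1=1e-4, shrink=0.5, max_iter=30):
    """Shrink the step size until the energy decreases sufficiently."""
    e0 = lj_energy(r)
    slope = lj_gradient(r) * direction  # negative for a descent direction
    alpha = alpha0
    for _ in range(max_iter):
        if lj_energy(r + alpha * direction) <= e0 + c1 * alpha * slope:
            return alpha
        alpha *= shrink  # trial point rejected: try a shorter step
    raise RuntimeError("line search failed")


r0 = 1.2
direction = -lj_gradient(r0)           # steepest-descent direction
alpha = backtracking_step(r0, direction)
r1 = r0 + alpha * direction
print(alpha, r1, lj_energy(r0), lj_energy(r1))
```

Here the first trial step would push the atoms far up the repulsive wall, so it is rejected and shortened until the energy actually drops. Each rejected trial costs an extra energy evaluation, which is the price paid for the added stability.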

Benchmark¶

[1]:

import os

from time import perf_counter

from typing import Optional, Type

import matplotlib.pyplot as plt

import pandas as pd

from ase import Atoms

from ase.build import bulk, molecule

from ase.calculators.calculator import Calculator

from ase.io import Trajectory

from ase.optimize import BFGS, FIRE, LBFGS, BFGSLineSearch, LBFGSLineSearch, MDMin

from ase.optimize.optimize import Optimizer

from tqdm.auto import tqdm

import pfp_api_client

from pfcc_extras.structure.ase_rdkit_converter import atoms_to_smiles, smiles_to_atoms

from pfcc_extras.visualize.view import view_ngl

from pfp_api_client.pfp.calculators.ase_calculator import ASECalculator

from pfp_api_client.pfp.estimator import Estimator, EstimatorCalcMode

[2]:

calc = ASECalculator(Estimator(calc_mode=EstimatorCalcMode.PBE, model_version="v8.0.0"))

calc_mol = ASECalculator(Estimator(calc_mode=EstimatorCalcMode.WB97XD, model_version="v8.0.0"))

print(f"pfp_api_client: {pfp_api_client.__version__}")

print(calc.estimator.model_version)

pfp_api_client: 1.23.1

v8.0.0

Comparison of number of steps (force calculation)¶

Below is a simple example of a structural optimization of a CH3CHO molecule. The structure obtained by molecule("CH3CHO") is not strictly stable, but it is a fairly good initial structure. Therefore, when starting from such a structure, the quasi-Newton method can be used to complete the structural optimization in a much smaller number of PFP calls.

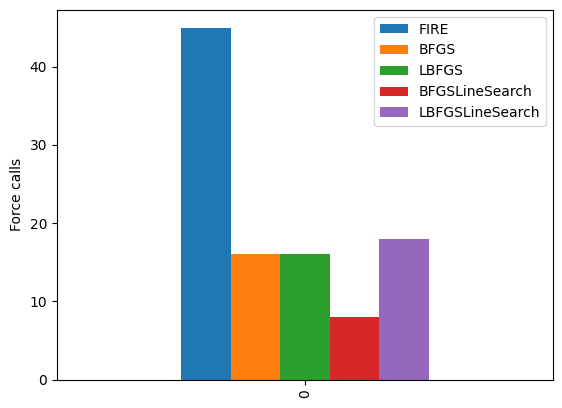

First, let’s benchmark how many times force is calculated until the structural optimization is complete. Here, we take the structure of the bulk of Pt moved randomly as the initial structure and measure the number of force calculations until the optimal structure is achieved.

[3]:

def get_force_calls(opt: Optimizer) -> int:
    """Obtain the number of force calculations."""
    if isinstance(opt, (BFGSLineSearch, LBFGSLineSearch)):
        # LineSearch optimizers may evaluate forces several times per step
        return opt.force_calls
    else:
        return opt.nsteps

[4]:

os.makedirs("output", exist_ok=True)

[5]:

atoms_0 = bulk("Pt") * (4, 4, 4)

atoms_0.rattle(stdev=0.1)

[6]:

view_ngl(atoms_0)

[7]:

force_calls = {}

for opt_class in tqdm([FIRE, BFGS, LBFGS, BFGSLineSearch, LBFGSLineSearch]):
    atoms = atoms_0.copy()
    atoms.calc = calc
    name = opt_class.__name__
    with opt_class(atoms, trajectory=f"output/{name}_ch3cho.traj", logfile=None) as opt:
        opt.run(fmax=0.05)
        force_calls[name] = [get_force_calls(opt)]

[8]:

df = pd.DataFrame.from_dict(force_calls)

df.plot.bar()

plt.ylabel("Force calls")

plt.show()

FIRE required the most force evaluations, while BFGS and LBFGS required about the same number as each other. The methods with LineSearch also required about as many force evaluations as BFGS and LBFGS.

Comparison of speed per step¶

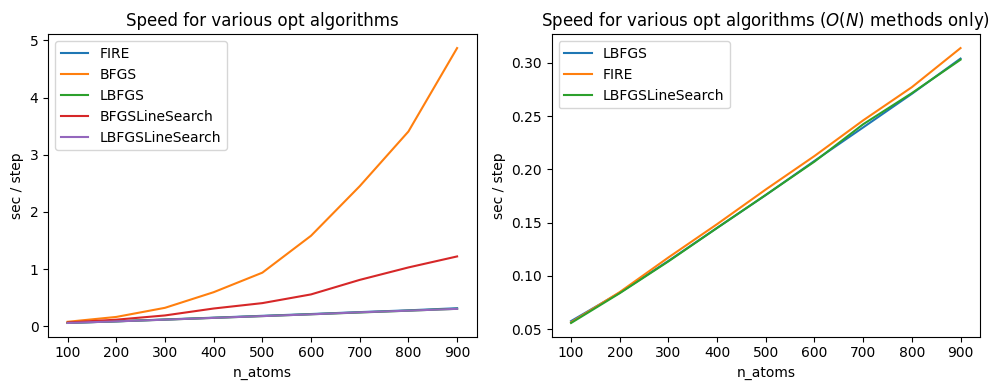

Next, let us compare the speed per step of each of the optimization methods.

[9]:

from ase.build import bulk

atoms = bulk("Fe") * (10, 5, 3)

atoms.rattle(stdev=0.2)

atoms.calc = calc

view_ngl(atoms)

[10]:

def opt_benchmark(atoms: Atoms, calculator: Calculator, opt_class, logfile: Optional[str] = "-", steps: int = 10) -> float:
    _atoms = atoms.copy()
    _atoms.calc = calculator
    with opt_class(_atoms, logfile=logfile) as opt:
        start_time = perf_counter()
        opt.run(steps=steps)
        end_time = perf_counter()
        duration = end_time - start_time
        duration_per_force_calls = duration / get_force_calls(opt)
    return duration_per_force_calls

[11]:

result_dict = {

"n_atoms": [],

"FIRE": [],

"BFGS": [],

"LBFGS": [],

"BFGSLineSearch": [],

"LBFGSLineSearch": [],

}

for i in range(1, 10):
    atoms = bulk("Fe") * (10, 10, i)
    atoms.rattle(stdev=0.2)
    n_atoms = atoms.get_global_number_of_atoms()
    result_dict["n_atoms"].append(n_atoms)
    print(f"----- n_atoms {n_atoms} -----")
    for opt_class in [FIRE, BFGS, LBFGS, BFGSLineSearch, LBFGSLineSearch]:
        name = opt_class.__name__
        print(f"Running {name}...")
        duration = opt_benchmark(atoms, calc, opt_class=opt_class, logfile=None, steps=10)
        print(f"Done in {duration:.2f} sec.")
        result_dict[name].append(duration)

----- n_atoms 100 -----

Running FIRE...

Done in 0.10 sec.

Running BFGS...

Done in 0.10 sec.

Running LBFGS...

Done in 0.09 sec.

Running BFGSLineSearch...

Done in 0.08 sec.

Running LBFGSLineSearch...

Done in 0.08 sec.

----- n_atoms 200 -----

Running FIRE...

Done in 0.09 sec.

Running BFGS...

Done in 0.15 sec.

Running LBFGS...

Done in 0.09 sec.

Running BFGSLineSearch...

Done in 0.11 sec.

Running LBFGSLineSearch...

Done in 0.09 sec.

----- n_atoms 300 -----

Running FIRE...

Done in 0.13 sec.

Running BFGS...

Done in 0.29 sec.

Running LBFGS...

Done in 0.15 sec.

Running BFGSLineSearch...

Done in 0.18 sec.

Running LBFGSLineSearch...

Done in 0.10 sec.

----- n_atoms 400 -----

Running FIRE...

Done in 0.08 sec.

Running BFGS...

Done in 0.42 sec.

Running LBFGS...

Done in 0.10 sec.

Running BFGSLineSearch...

Done in 0.19 sec.

Running LBFGSLineSearch...

Done in 0.08 sec.

----- n_atoms 500 -----

Running FIRE...

Done in 0.10 sec.

Running BFGS...

Done in 0.73 sec.

Running LBFGS...

Done in 0.09 sec.

Running BFGSLineSearch...

Done in 0.26 sec.

Running LBFGSLineSearch...

Done in 0.10 sec.

----- n_atoms 600 -----

Running FIRE...

Done in 0.10 sec.

Running BFGS...

Done in 1.28 sec.

Running LBFGS...

Done in 0.10 sec.

Running BFGSLineSearch...

Done in 0.40 sec.

Running LBFGSLineSearch...

Done in 0.11 sec.

----- n_atoms 700 -----

Running FIRE...

Done in 0.14 sec.

Running BFGS...

Done in 2.03 sec.

Running LBFGS...

Done in 0.11 sec.

Running BFGSLineSearch...

Done in 0.61 sec.

Running LBFGSLineSearch...

Done in 0.12 sec.

----- n_atoms 800 -----

Running FIRE...

Done in 0.14 sec.

Running BFGS...

Done in 3.07 sec.

Running LBFGS...

Done in 0.15 sec.

Running BFGSLineSearch...

Done in 0.84 sec.

Running LBFGSLineSearch...

Done in 0.14 sec.

----- n_atoms 900 -----

Running FIRE...

Done in 0.13 sec.

Running BFGS...

Done in 4.62 sec.

Running LBFGS...

Done in 0.12 sec.

Running BFGSLineSearch...

Done in 1.06 sec.

Running LBFGSLineSearch...

Done in 0.13 sec.

[12]:

import matplotlib.pyplot as plt

import pandas as pd

fig, axes = plt.subplots(1, 2, tight_layout=True, figsize=(10, 4))

df = pd.DataFrame(result_dict)

df = df.set_index("n_atoms")

df.plot(ax=axes[0])

axes[0].set_title("Speed for various opt algorithms")

df[["LBFGS", "FIRE", "LBFGSLineSearch"]].plot(ax=axes[1])

axes[1].set_title("Speed for various opt algorithms ($O(N)$ methods only)")

for ax in axes:
    ax.set_ylabel("sec / step")
fig.savefig("output/opt_benchmark.png")
# plt.show() displays all open figures; it does not take a figure argument
plt.show()

In BFGS and BFGSLineSearch, the calculation time per step increases as the number of atoms increases.

The BFGS method implemented in ASE performs an eigenvalue decomposition at each step to invert the Hessian. Since the Hessian has a size of 3N, where N is the number of atoms, and the eigenvalue decomposition takes \(O(N^3)\) computational time, the BFGS method in ASE slows down rapidly, on the order of the cube of the number of atoms.

BFGSLineSearch directly approximates the inverse Hessian without performing an eigenvalue decomposition. This makes it faster than BFGS: it takes \(O(N^2)\) computational time, because the matrix-vector product becomes the rate-limiting step.

However, it is still much slower than LBFGS and FIRE, which scale as \(O(N)\), when the number of atoms is large.
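
As a rough sanity check of these scaling arguments, one can estimate an empirical exponent from two of the timings printed above (300 and 900 atoms). This is only a back-of-the-envelope sketch: the timings include the force evaluation itself and come from a single run, so the exponents understate the asymptotic behavior. The helper name `scaling_exponent` is an assumption.

```python
import math

# Seconds per force call, taken from the benchmark output above
timings = {
    "BFGS": {300: 0.29, 900: 4.62},
    "BFGSLineSearch": {300: 0.18, 900: 1.06},
    "LBFGS": {300: 0.15, 900: 0.12},
}


def scaling_exponent(n1, t1, n2, t2):
    """Slope of log(t) versus log(n) between two measurements."""
    return math.log(t2 / t1) / math.log(n2 / n1)


exponents = {
    name: scaling_exponent(300, t[300], 900, t[900])
    for name, t in timings.items()
}
for name, p in exponents.items():
    print(f"{name}: time ~ N^{p:.2f}")
```

The estimated exponent for BFGS is clearly larger than that for BFGSLineSearch, while LBFGS shows essentially no growth over this range, which is consistent with the discussion above.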

Use of different optimization algorithms¶

We have seen that FIRE requires more force calls than other methods, and that for BFGS and BFGSLineSearch the internal routines of the optimizer, rather than the force evaluation, become the bottleneck when the number of atoms is large. So in what cases should we use LBFGS and LBFGSLineSearch? We will now look at some cases where even those algorithms do not work.

Examples where LBFGS and BFGS fail¶

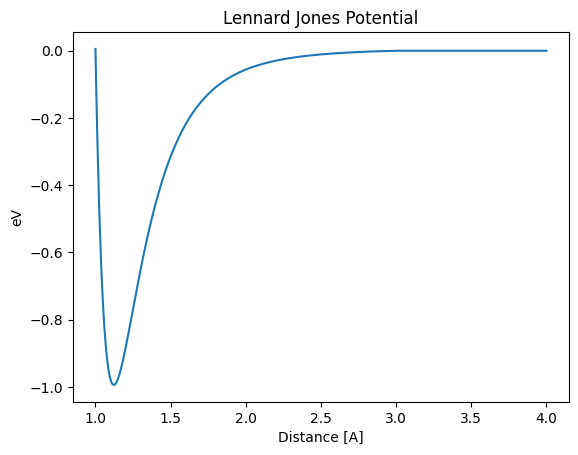

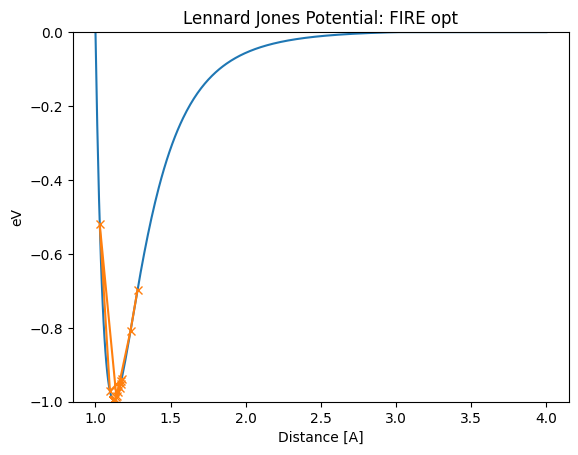

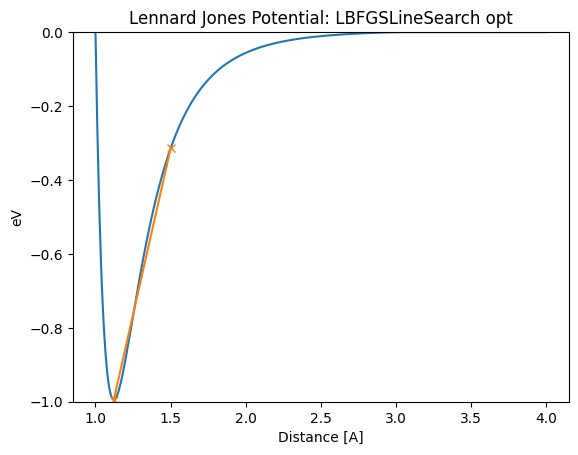

Let’s look at the next example. It uses a very simple potential, the Lennard-Jones (LJ) potential, and performs structural optimization from initial distances away from the stability point.

The LJ potential between two atoms at distance \(r\) is \(E(r) = 4\epsilon \left[ (\sigma/r)^{12} - (\sigma/r)^{6} \right]\), and it can be visualized as below.

[13]:

from ase.calculators.lj import LennardJones

import numpy as np

calc_lj = LennardJones()

dists = np.linspace(1.0, 4.0, 500)

E_pot_list = []

for d in dists:
    atoms = Atoms("Ar2", [[0, 0, 0], [d, 0, 0]])
    atoms.calc = calc_lj
    E_pot = atoms.get_potential_energy()
    E_pot_list.append(E_pot)

plt.plot(dists, E_pot_list)

plt.title("Lennard Jones Potential")

plt.ylabel("eV")

plt.xlabel("Distance [A]")

plt.show()

Let’s run a structural optimization of this simple potential form by starting the distance away from the stability point, 1.12A.
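
The value 1.12A can be derived directly from the standard LJ form: setting the derivative of \(4\epsilon[(\sigma/r)^{12} - (\sigma/r)^{6}]\) to zero gives \(r_{min} = 2^{1/6}\sigma\). The sketch below checks this numerically. It assumes \(\epsilon = \sigma = 1\) and a cutoff at \(3\sigma\) with the energy shifted to zero at the cutoff; that shift is an assumption about the calculator's defaults, suggested by the converged energies of about -0.9945 (rather than -1) in the logs below.

```python
def lj_energy(r, epsilon=1.0, sigma=1.0, cutoff=3.0):
    """LJ pair energy, shifted so that it vanishes at the cutoff (assumed)."""
    def raw(x):
        return 4.0 * epsilon * ((sigma / x) ** 12 - (sigma / x) ** 6)
    return raw(r) - raw(cutoff)


sigma = 1.0
r_min = 2.0 ** (1.0 / 6.0) * sigma  # analytic position of the minimum
e_min = lj_energy(r_min)
print(f"r_min = {r_min:.4f}, E(r_min) = {e_min:.6f}")

# The energy is higher on both sides of r_min
assert lj_energy(r_min - 0.01) > e_min
assert lj_energy(r_min + 0.01) > e_min
```

Note also that the curvature of the LJ potential changes sign a little beyond the minimum, so starting points such as 1.28A or 1.5A lie in a region where a quadratic model of the potential is poor.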

[14]:

def lennard_jones_trajectory(opt_class: Type[Optimizer], d0: float):
    name = opt_class.__name__
    trajectory_path = f"output/Ar_{name}.traj"

    atoms = Atoms("Ar2", [[0, 0, 0], [d0, 0, 0]])
    atoms.calc = calc_lj

    opt = opt_class(atoms, trajectory=trajectory_path)
    opt.run()

    distance_list = []
    energy_list = []
    for atoms in Trajectory(trajectory_path):
        energy_list.append(atoms.get_potential_energy())
        distance_list.append(atoms.get_distance(0, 1))

    print("Distance in opt trajectory: ", distance_list)

    plt.plot(dists, E_pot_list)
    plt.plot(distance_list, energy_list, marker="x")
    plt.title(f"Lennard Jones Potential: {name} opt")
    plt.ylim(-1.0, 0)
    plt.ylabel("eV")
    plt.xlabel("Distance [A]")
    plt.show()

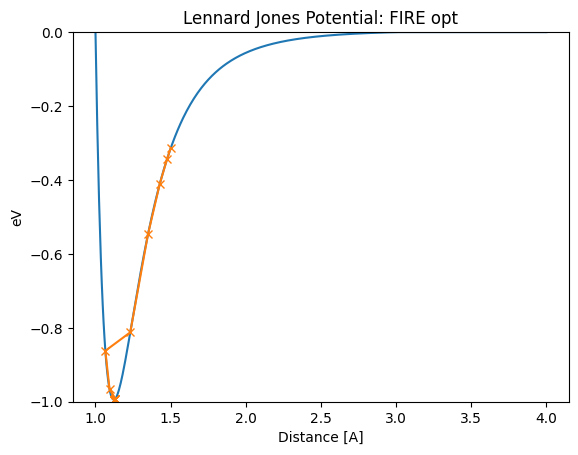

The first case is when FIRE is used. The optimum value of 1.12A is reached in a stable manner.

[15]:

lennard_jones_trajectory(FIRE, 1.5)

Step Time Energy fmax

FIRE: 0 04:30:07 -0.314857 1.158029

FIRE: 1 04:30:07 -0.342894 1.264380

FIRE: 2 04:30:07 -0.410024 1.512419

FIRE: 3 04:30:07 -0.546744 1.968308

FIRE: 4 04:30:07 -0.812180 2.383891

FIRE: 5 04:30:07 -0.862167 5.585844

FIRE: 6 04:30:07 -0.966377 2.149190

FIRE: 7 04:30:07 -0.991817 0.522302

FIRE: 8 04:30:07 -0.992148 0.491275

FIRE: 9 04:30:07 -0.992732 0.429940

FIRE: 10 04:30:07 -0.993427 0.339973

FIRE: 11 04:30:07 -0.994057 0.224455

FIRE: 12 04:30:07 -0.994451 0.088426

FIRE: 13 04:30:07 -0.994489 0.060610

FIRE: 14 04:30:07 -0.994490 0.059506

FIRE: 15 04:30:07 -0.994492 0.057320

FIRE: 16 04:30:07 -0.994495 0.054094

FIRE: 17 04:30:07 -0.994499 0.049889

Distance in opt trajectory: [1.5, 1.4768394233790767, 1.42839123774426, 1.349694678243147, 1.231631957294667, 1.0658914242047832, 1.0938206432760949, 1.132495812583008, 1.1318429355798734, 1.1305759647542164, 1.1287715691339621, 1.1265422074054834, 1.1240322773166505, 1.1214118150599879, 1.1214307556785812, 1.1214682920288386, 1.1215237409768002, 1.1215960942672192]

[16]:

lennard_jones_trajectory(FIRE, 1.28)

Step Time Energy fmax

FIRE: 0 04:30:08 -0.697220 2.324552

FIRE: 1 04:30:08 -0.807702 2.387400

FIRE: 2 04:30:08 -0.987241 0.821987

FIRE: 3 04:30:08 -0.520102 13.570402

FIRE: 4 04:30:08 -0.971689 1.903593

FIRE: 5 04:30:08 -0.939109 1.840004

FIRE: 6 04:30:08 -0.943288 1.793714

FIRE: 7 04:30:08 -0.951214 1.694594

FIRE: 8 04:30:08 -0.961964 1.529352

FIRE: 9 04:30:08 -0.974030 1.277952

FIRE: 10 04:30:08 -0.985244 0.914936

FIRE: 11 04:30:08 -0.992867 0.414203

FIRE: 12 04:30:08 -0.994043 0.239611

FIRE: 13 04:30:08 -0.994061 0.235001

FIRE: 14 04:30:08 -0.994095 0.225890

FIRE: 15 04:30:08 -0.994143 0.212487

FIRE: 16 04:30:08 -0.994201 0.195100

FIRE: 17 04:30:08 -0.994265 0.174121

FIRE: 18 04:30:08 -0.994330 0.150013

FIRE: 19 04:30:08 -0.994391 0.123296

FIRE: 20 04:30:08 -0.994449 0.091418

FIRE: 21 04:30:08 -0.994495 0.054286

FIRE: 22 04:30:08 -0.994519 0.012221

Distance in opt trajectory: [1.28, 1.2335089629222111, 1.1392699328939198, 1.0285911631178963, 1.0964431714345662, 1.1738131464936081, 1.1715131413850235, 1.1669709941864936, 1.160310604717144, 1.1517385248429906, 1.1415690055780352, 1.130255815977825, 1.118424872251103, 1.1184997505580012, 1.1186480666965142, 1.1188669733107373, 1.1191522821112356, 1.1194985597723826, 1.1198992503739216, 1.1203468201911215, 1.1208857684008715, 1.121520438052656, 1.1222486280893154]

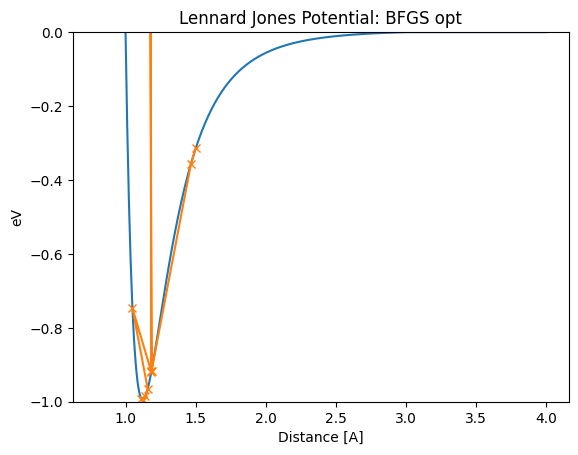

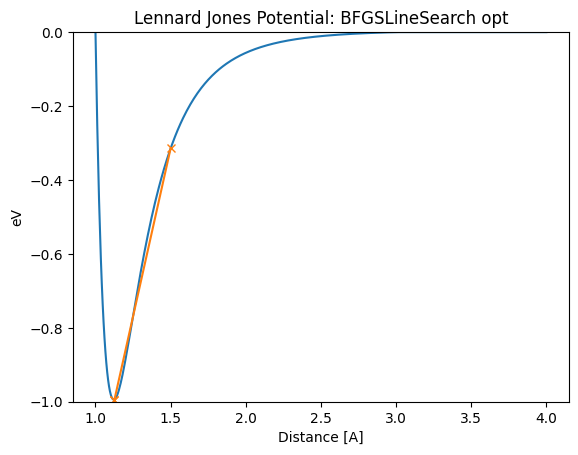

If we start the BFGS method at 1.5A, we find that it takes overly large steps at certain points and jumps far past the stability point (for example, from 1.18A to 0.78A). As a result, the distance oscillates significantly before reaching the optimum value of 1.12A, even in this simple example. When the potential energy surface differs greatly from a quadratic function, a method based on the quadratic approximation estimates a minimum that is far from the actual one.

[17]:

lennard_jones_trajectory(BFGS, 1.5)

Step Time Energy fmax

BFGS: 0 04:30:08 -0.314857 1.158029

BFGS: 1 04:30:08 -0.355681 1.312428

BFGS: 2 04:30:08 -0.916039 2.041011

BFGS: 3 04:30:08 55.304434 974.487533

BFGS: 4 04:30:08 -0.917740 2.028761

BFGS: 5 04:30:08 -0.919418 2.016337

BFGS: 6 04:30:08 -0.747080 8.517609

BFGS: 7 04:30:08 -0.965068 1.472894

BFGS: 8 04:30:08 -0.984660 0.939655

BFGS: 9 04:30:08 -0.992324 0.528593

BFGS: 10 04:30:08 -0.994444 0.092418

BFGS: 11 04:30:08 -0.994520 0.007274

Distance in opt trajectory: [1.5, 1.4669134619701096, 1.1856710148074785, 0.7856710148074794, 1.1848349876531552, 1.1840057048694352, 1.0494144415981015, 1.1582431525834522, 1.1421985974452062, 1.1139254943723917, 1.1241042745909042, 1.1225894828343739]

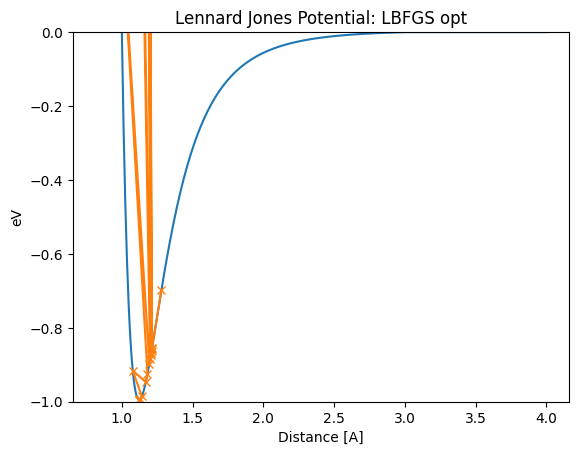

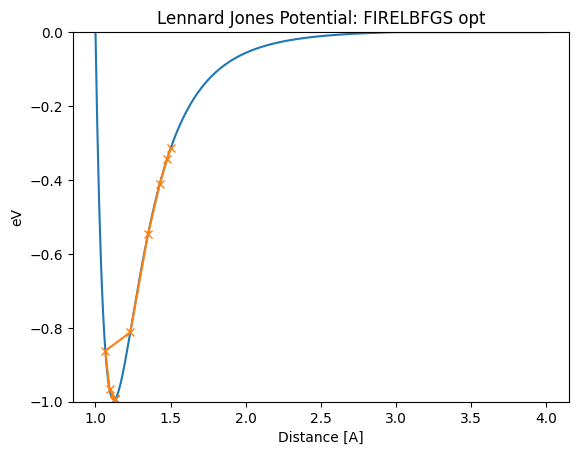

The same thing happens with the LBFGS method. If such oscillations occur in a polyatomic system, the optimization may not be completed for a long time.

[18]:

lennard_jones_trajectory(LBFGS, 1.28)

Step Time Energy fmax

LBFGS: 0 04:30:08 -0.697220 2.324552

LBFGS: 1 04:30:08 -0.854683 2.314904

LBFGS: 2 04:30:08 33.771592 599.751912

LBFGS: 3 04:30:08 -0.858237 2.305680

LBFGS: 4 04:30:08 -0.861748 2.295944

LBFGS: 5 04:30:08 17.305333 318.491824

LBFGS: 6 04:30:08 -0.867638 2.278148

LBFGS: 7 04:30:08 -0.873395 2.258876

LBFGS: 8 04:30:08 5.604073 121.154807

LBFGS: 9 04:30:08 -0.885566 2.211348

LBFGS: 10 04:30:08 -0.897001 2.157091

LBFGS: 11 04:30:08 0.369118 30.787165

LBFGS: 12 04:30:08 -0.925197 1.970726

LBFGS: 13 04:30:08 -0.947015 1.749104

LBFGS: 14 04:30:08 -0.915603 4.009032

LBFGS: 15 04:30:08 -0.985429 0.906882

LBFGS: 16 04:30:08 -0.993163 0.377052

LBFGS: 17 04:30:08 -0.994465 0.080647

LBFGS: 18 04:30:08 -0.994520 0.005364

Distance in opt trajectory: [1.28, 1.213584232746016, 0.813584232746016, 1.2120462614888505, 1.2105202846657441, 0.8506725019776178, 1.2079447803538972, 1.2054073889508412, 0.9079986088524912, 1.1999638317862706, 1.1947303311793407, 0.9866659932915066, 1.1811069055371053, 1.169409273526655, 1.0770879993440852, 1.1413655567592533, 1.1295077111909069, 1.1210690913427286, 1.1225559889249785]

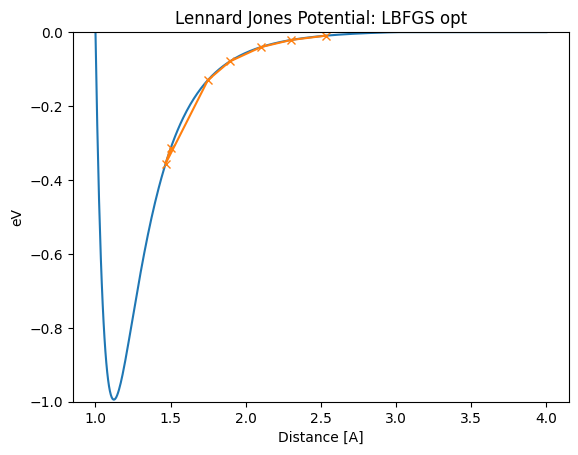

There are also cases where the optimization never reaches the stability point when it starts far from it: in the run below, the distance keeps increasing until the force falls below the convergence threshold. This may be caused by a problem with the initialization of the Hessian or other reasons.

[19]:

lennard_jones_trajectory(LBFGS, 1.50)

Step Time Energy fmax

LBFGS: 0 04:30:09 -0.314857 1.158029

LBFGS: 1 04:30:09 -0.355681 1.312428

LBFGS: 2 04:30:09 -0.129756 0.447300

LBFGS: 3 04:30:09 -0.079409 0.263016

LBFGS: 4 04:30:09 -0.040472 0.129677

LBFGS: 5 04:30:09 -0.021155 0.068924

LBFGS: 6 04:30:09 -0.009646 0.035707

Distance in opt trajectory: [1.5, 1.4669134619701096, 1.748155909132741, 1.8935676545167726, 2.101103976550205, 2.302941039822042, 2.531922335240822]

Even in such cases where BFGS and LBFGS do not work, adding LineSearch lets the optimization converge correctly. LBFGSLineSearch is a better optimizer in most cases.

[20]:

lennard_jones_trajectory(LBFGSLineSearch, 1.5)

Step Time Energy fmax

LBFGSLineSearch: 0 04:30:09 -0.314857 1.158029

LBFGSLineSearch: 1 04:30:09 -0.994517 0.021446

Distance in opt trajectory: [1.5, 1.1220880716401869]

LBFGSLineSearch appears to finish in a few steps, but the actual number of calculations is a bit higher because the part of LineSearch being done internally is not counted as a step. BFGSLineSearch shows Step[FC], where FC is the actual number of force evaluations.

[21]:

lennard_jones_trajectory(BFGSLineSearch, 1.5)

Step[ FC] Time Energy fmax

BFGSLineSearch: 0[ 0] 04:30:09 -0.314857 1.1580

BFGSLineSearch: 1[ 5] 04:30:09 -0.994057 0.2244

BFGSLineSearch: 2[ 7] 04:30:09 -0.994520 0.0054

Distance in opt trajectory: [1.5, 1.1265420003736115, 1.1225561293552997]

We have seen here that there are examples where BFGS and LBFGS do not work. But even in these instances, LBFGSLineSearch has worked well.

Our conclusion so far is that LBFGSLineSearch is the best optimizer in many cases. Below we discuss the shortcomings of the other optimizers.

FIRE: Requires many steps to converge.

BFGS: Takes a long time when the number of atoms is large.

BFGSLineSearch: Takes a long time when the number of atoms is large.

LBFGS: May oscillate or increase energy sometimes.

Unfortunately, however, LBFGSLineSearch does not always work either.

Example where LBFGSLineSearch fails¶

LBFGSLineSearch is a mature method, and it works well in many cases. However, there are rare cases where it does not. This tends to happen when one wants to perform precise structural optimization with a small fmax. For example, to optimize the rotation of the methyl group of toluene, you need to set fmax=0.001 eV/A to reach the minimum with respect to the rotation. In this example, LineSearch does not converge easily: one step may take a long time, the energy may not drop, or in some cases LineSearch fails and an error occurs with RuntimeError: LineSearch failed!

[22]:

atoms_0 = smiles_to_atoms("Cc1ccccc1")

tmp = atoms_0[7:10]

tmp.rotate([1.0, 0.0, 0.0], 15.0)

atoms_0.positions[7:10] = tmp.positions

atoms_0.calc = calc_mol

[23]:

view_ngl(atoms_0, representations=["ball+stick"], h=600, w=600)

[24]:

# Please set '-', if you want to see detailed logs.

logfile = None

steps = {}

images = []

for optimizer_class in (FIRE, LBFGS, LBFGSLineSearch):
    name = optimizer_class.__name__
    atoms = atoms_0.copy()
    atoms.calc = calc_mol
    with optimizer_class(atoms, logfile=logfile) as opt:
        try:
            print(f"{name} optimization starts.")
            opt.run(fmax=0.001, steps=200)
            print(f"Optimization finished without error. steps = {opt.nsteps}")
        finally:
            steps[name] = [get_force_calls(opt)]
            images.append(atoms.copy())

FIRE optimization starts.

Optimization finished without error. steps = 134

LBFGS optimization starts.

Optimization finished without error. steps = 47

LBFGSLineSearch optimization starts.

---------------------------------------------------------------------------

RuntimeError Traceback (most recent call last)

Cell In[24], line 13

11 try:

12 print(f"{name} optimization starts.")

---> 13 opt.run(fmax=0.001, steps=200)

14 print(f"Optimization finished without error. steps = {opt.nsteps}")

15 finally:

File ~/.py311/lib/python3.11/site-packages/ase/optimize/optimize.py:417, in Optimizer.run(self, fmax, steps)

402 """Run optimizer.

403

404 Parameters

(...)

414 True if the forces on atoms are converged.

415 """

416 self.fmax = fmax

--> 417 return Dynamics.run(self, steps=steps)

File ~/.py311/lib/python3.11/site-packages/ase/optimize/optimize.py:286, in Dynamics.run(self, steps)

268 def run(self, steps=DEFAULT_MAX_STEPS):

269 """Run dynamics algorithm.

270

271 This method will return when the forces on all individual

(...)

283 True if the forces on atoms are converged.

284 """

--> 286 for converged in Dynamics.irun(self, steps=steps):

287 pass

288 return converged

File ~/.py311/lib/python3.11/site-packages/ase/optimize/optimize.py:257, in Dynamics.irun(self, steps)

254 # run the algorithm until converged or max_steps reached

255 while not is_converged and self.nsteps < self.max_steps:

256 # compute the next step

--> 257 self.step()

258 self.nsteps += 1

260 # log the step

File ~/.py311/lib/python3.11/site-packages/ase/optimize/lbfgs.py:158, in LBFGS.step(self, forces)

156 if self.use_line_search is True:

157 e = self.func(pos)

--> 158 self.line_search(pos, g, e)

159 dr = (self.alpha_k * self.p).reshape(len(self.optimizable), -1)

160 else:

File ~/.py311/lib/python3.11/site-packages/ase/optimize/lbfgs.py:251, in LBFGS.line_search(self, r, g, e)

246 self.alpha_k, e, self.e0, self.no_update = \

247 ls._line_search(self.func, self.fprime, r, self.p, g, e, self.e0,

248 maxstep=self.maxstep, c1=.23,

249 c2=.46, stpmax=50.)

250 if self.alpha_k is None:

--> 251 raise RuntimeError('LineSearch failed!')

RuntimeError: LineSearch failed!

[25]:

steps["index"] = "nsteps"

df = pd.DataFrame(steps)

df = df.set_index("index")

df

[25]:

| index | FIRE | LBFGS | LBFGSLineSearch |
|---|---|---|---|
| nsteps | 134 | 47 | 325 |

In this example, both FIRE and LBFGS finished within the 200-step limit, with LBFGS needing far fewer steps (47) than FIRE (134).

Also, LBFGSLineSearch took a very long time per step in the latter half and did not converge, in some cases giving the error RuntimeError: LineSearch failed!. In most cases, LBFGSLineSearch has difficulty converging around fmax=0.002 eV/A. In some cases, even fmax=0.05 may not be reached if the shape of the potential is worse.

If LBFGSLineSearch stops working like this, you may have to re-run LBFGSLineSearch to get it to work. (Sometimes it doesn’t work.)

[26]:

with LBFGSLineSearch(atoms) as opt:
    opt.run(0.001)

Step Time Energy fmax

LBFGSLineSearch: 0 04:31:43 -72.229813 0.002213

LBFGSLineSearch: 1 04:31:44 -72.229811 0.002213

LBFGSLineSearch: 2 04:31:44 -72.229812 0.000925

[27]:

view_ngl(images, representations=["ball+stick"])

Implementation of optimization algorithms based on the above findings¶

LBFGSLineSearch is a good optimizer, but it can be very slow with small fmax, and in some cases, it can also produce errors. FIRE is often slower than LBFGSLineSearch, but it is robust in many cases. However, it may not converge easily when fmax is small. LBFGS converges even with small fmax, but in some cases, it may oscillate or increase its energy, as seen in the Lennard-Jones example.

Heuristically, close to the stability point, where the quadratic approximation is good, we want to use a second-order (Newton-type) method with good convergence. We adopt LBFGS there, since LBFGSLineSearch is not numerically stable in some cases. For unstable structures where the quadratic approximation is poor, we adopt FIRE or LBFGSLineSearch to lower the energy robustly.

However, it is difficult to predict at what fmax LBFGSLineSearch stops being numerically stable. FIRE is the more stable choice when a method is applied uniformly to unknown materials, such as in high-throughput calculations.

To summarize our findings so far, the following strategy seems to work well heuristically:

In unstable regions, such as the early stages of structural optimization, use FIRE to move down the gradient.

Once the structure is reasonably stable (small forces, etc.), switch to LBFGS to attempt fast convergence.

This algorithm is implemented as ``FIRELBFGS`` optimizer in matlantis-features.

[28]:

from matlantis_features.ase_ext.optimize import FIRELBFGS

First, let’s look at an example where LBFGSLineSearch does not work: the rotation of the methyl group of toluene. FIRELBFGS works in these examples and converges robustly, slower than LBFGS but much faster than FIRE.

[29]:

atoms_0 = smiles_to_atoms("Cc1ccccc1")

tmp = atoms_0[7:10]

tmp.rotate([1.0, 0.0, 0.0], 15.0)

atoms_0.positions[7:10] = tmp.positions

atoms_0.calc = calc_mol

[30]:

view_ngl(atoms_0, representations=["ball+stick"], h=600, w=600)

[31]:

steps = {}

images = []

atoms = atoms_0.copy()

atoms.calc = calc_mol

with FIRELBFGS(atoms, logfile="-") as opt:
    try:
        opt.run(fmax=0.001, steps=200)
    finally:
        # Use an explicit key; `name` would still hold the value from the earlier loop
        steps["FIRELBFGS"] = [get_force_calls(opt)]
        images.append(atoms.copy())

Step Time Energy fmax

FIRELBFGS: 0 04:31:58 -72.073380 1.815006

FIRELBFGS: 1 04:31:58 -72.146437 0.786663

FIRELBFGS: 2 04:31:58 -72.152324 1.287662

FIRELBFGS: 3 04:31:58 -72.164941 1.010978

FIRELBFGS: 4 04:31:58 -72.179629 0.534161

FIRELBFGS: 5 04:31:58 -72.186041 0.417720

FIRELBFGS: 6 04:31:59 -72.185550 0.641442

FIRELBFGS: 7 04:31:59 -72.186688 0.596239

FIRELBFGS: 8 04:31:59 -72.188711 0.510811

FIRELBFGS: 9 04:31:59 -72.191216 0.396012

FIRELBFGS: 10 04:31:59 -72.193697 0.310661

FIRELBFGS: 11 04:31:59 -72.195816 0.273045

FIRELBFGS: 12 04:31:59 -72.197418 0.261057

FIRELBFGS: 13 04:31:59 -72.198668 0.275473

FIRELBFGS: 14 04:31:59 -72.199987 0.332302

FIRELBFGS: 15 04:31:59 -72.201756 0.344170

FIRELBFGS: 16 04:31:59 -72.204250 0.328235

FIRELBFGS: 17 04:31:59 -72.207430 0.321986

FIRELBFGS: 18 04:31:59 -72.210841 0.250041

FIRELBFGS: 19 04:31:59 -72.213976 0.221368

FIRELBFGS: 20 04:31:59 -72.216760 0.231969

FIRELBFGS: 21 04:32:00 -72.219344 0.216033

FIRELBFGS: 22 04:32:00 -72.221568 0.253937

FIRELBFGS: 23 04:32:00 -72.223446 0.181132

FIRELBFGS: 24 04:32:00 -72.224756 0.140055

FIRELBFGS: 25 04:32:00 -72.224731 0.216246

FIRELBFGS: 26 04:32:00 -72.224983 0.187434

FIRELBFGS: 27 04:32:00 -72.225375 0.134279

FIRELBFGS: 28 04:32:00 -72.225718 0.080261

FIRELBFGS: 29 04:32:00 -72.225929 0.074791

FIRELBFGS: 30 04:32:00 -72.226054 0.096927

FIRELBFGS: 31 04:32:00 -72.226209 0.129175

FIRELBFGS: 32 04:32:00 -72.226446 0.142852

FIRELBFGS: 33 04:32:00 -72.226774 0.124897

FIRELBFGS: 34 04:32:01 -72.227105 0.071352

FIRELBFGS: 35 04:32:01 -72.227345 0.059043

FIRELBFGS: 36 04:32:01 -72.227510 0.087459

FIRELBFGS: 37 04:32:01 -72.227620 0.119941

FIRELBFGS: 38 04:32:01 -72.227739 0.086041

FIRELBFGS: 39 04:32:01 -72.227887 0.044879

FIRELBFGS: 40 04:32:01 -72.227913 0.072795

FIRELBFGS: 41 04:32:01 -72.227960 0.062329

FIRELBFGS: 42 04:32:01 -72.228017 0.050785

FIRELBFGS: 43 04:32:01 -72.228083 0.037123

FIRELBFGS: 44 04:32:01 -72.228135 0.028326

FIRELBFGS: 45 04:32:01 -72.228171 0.047616

FIRELBFGS: 46 04:32:01 -72.228226 0.060909

FIRELBFGS: 47 04:32:02 -72.228285 0.061936

FIRELBFGS: 48 04:32:02 -72.228365 0.048574

FIRELBFGS: 49 04:32:02 -72.228440 0.021797

FIRELBFGS: 50 04:32:02 -72.228510 0.026017

FIRELBFGS: 51 04:32:02 -72.228564 0.039423

FIRELBFGS: 52 04:32:02 -72.228630 0.037856

FIRELBFGS: 53 04:32:02 -72.228710 0.022095

FIRELBFGS: 54 04:32:02 -72.228780 0.031217

FIRELBFGS: 55 04:32:02 -72.228868 0.038054

FIRELBFGS: 56 04:32:02 -72.229004 0.018406

FIRELBFGS: 57 04:32:02 -72.229129 0.036774

FIRELBFGS: 58 04:32:02 -72.229271 0.016861

FIRELBFGS: 59 04:32:02 -72.229288 0.013714

FIRELBFGS: 60 04:32:02 -72.229307 0.013447

FIRELBFGS: 61 04:32:02 -72.229422 0.034591

FIRELBFGS: 62 04:32:03 -72.229462 0.020173

FIRELBFGS: 63 04:32:03 -72.229499 0.019644

FIRELBFGS: 64 04:32:03 -72.229550 0.022996

FIRELBFGS: 65 04:32:03 -72.229630 0.038037

FIRELBFGS: 66 04:32:03 -72.229697 0.030483

FIRELBFGS: 67 04:32:03 -72.229726 0.014026

FIRELBFGS: 68 04:32:03 -72.229747 0.010100

FIRELBFGS: 69 04:32:03 -72.229752 0.011032

FIRELBFGS: 70 04:32:03 -72.229759 0.008812

FIRELBFGS: 71 04:32:03 -72.229761 0.006100

FIRELBFGS: 72 04:32:03 -72.229770 0.006390

FIRELBFGS: 73 04:32:03 -72.229773 0.010474

FIRELBFGS: 74 04:32:04 -72.229781 0.011869

FIRELBFGS: 75 04:32:04 -72.229788 0.008293

FIRELBFGS: 76 04:32:04 -72.229798 0.009606

FIRELBFGS: 77 04:32:04 -72.229800 0.008666

FIRELBFGS: 78 04:32:04 -72.229804 0.009679

FIRELBFGS: 79 04:32:04 -72.229811 0.005932

FIRELBFGS: 80 04:32:04 -72.229810 0.002231

FIRELBFGS: 81 04:32:04 -72.229806 0.001912

FIRELBFGS: 82 04:32:04 -72.229811 0.001751

FIRELBFGS: 83 04:32:04 -72.229817 0.001787

FIRELBFGS: 84 04:32:04 -72.229809 0.001258

FIRELBFGS: 85 04:32:05 -72.229813 0.000466

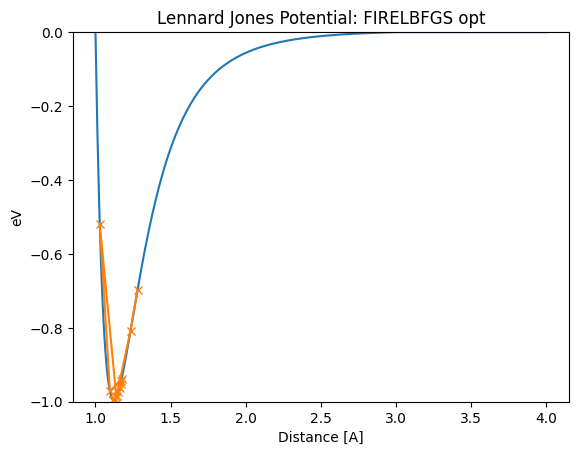

Next, let’s revisit the Ar dimer cases in which LBFGS oscillated strongly or climbed the potential. Even in these cases, FIRELBFGS converges robustly without breaking down.

[32]:

lennard_jones_trajectory(FIRELBFGS, 1.5)

Step Time Energy fmax

FIRELBFGS: 0 04:32:07 -0.314857 1.158029

FIRELBFGS: 1 04:32:07 -0.342894 1.264380

FIRELBFGS: 2 04:32:07 -0.410024 1.512419

FIRELBFGS: 3 04:32:07 -0.546744 1.968308

FIRELBFGS: 4 04:32:07 -0.812180 2.383891

FIRELBFGS: 5 04:32:07 -0.862167 5.585844

FIRELBFGS: 6 04:32:07 -0.966377 2.149190

FIRELBFGS: 7 04:32:07 -0.991817 0.522302

FIRELBFGS: 8 04:32:07 -0.992148 0.491275

FIRELBFGS: 9 04:32:07 -0.992732 0.429940

FIRELBFGS: 10 04:32:07 -0.993427 0.339973

FIRELBFGS: 11 04:32:07 -0.994057 0.224455

FIRELBFGS: 12 04:32:07 -0.994451 0.088426

FIRELBFGS: 13 04:32:07 -0.994489 0.060610

FIRELBFGS: 14 04:32:07 -0.994490 0.059506

FIRELBFGS: 15 04:32:07 -0.994492 0.057320

FIRELBFGS: 16 04:32:07 -0.994495 0.054094

FIRELBFGS: 17 04:32:07 -0.994499 0.049889

Distance in opt trajectory: [1.5, 1.4768394233790767, 1.42839123774426, 1.349694678243147, 1.231631957294667, 1.0658914242047832, 1.0938206432760949, 1.132495812583008, 1.1318429355798734, 1.1305759647542164, 1.1287715691339621, 1.1265422074054834, 1.1240322773166505, 1.1214118150599879, 1.1214307556785812, 1.1214682920288386, 1.1215237409768002, 1.1215960942672192]

[33]:

lennard_jones_trajectory(FIRELBFGS, 1.28)

Step Time Energy fmax

FIRELBFGS: 0 04:32:08 -0.697220 2.324552

FIRELBFGS: 1 04:32:08 -0.807702 2.387400

FIRELBFGS: 2 04:32:08 -0.987241 0.821987

FIRELBFGS: 3 04:32:08 -0.520102 13.570402

FIRELBFGS: 4 04:32:08 -0.971689 1.903593

FIRELBFGS: 5 04:32:08 -0.939109 1.840004

FIRELBFGS: 6 04:32:08 -0.943288 1.793714

FIRELBFGS: 7 04:32:08 -0.951214 1.694594

FIRELBFGS: 8 04:32:08 -0.961964 1.529352

FIRELBFGS: 9 04:32:08 -0.974030 1.277952

FIRELBFGS: 10 04:32:08 -0.985244 0.914936

FIRELBFGS: 11 04:32:08 -0.992867 0.414203

FIRELBFGS: 12 04:32:08 -0.994043 0.239611

FIRELBFGS: 13 04:32:08 -0.994061 0.235001

FIRELBFGS: 14 04:32:08 -0.994095 0.225890

FIRELBFGS: 15 04:32:08 -0.994143 0.212487

FIRELBFGS: 16 04:32:08 -0.994201 0.195100

FIRELBFGS: 17 04:32:08 -0.994265 0.174121

FIRELBFGS: 18 04:32:08 -0.994330 0.150013

FIRELBFGS: 19 04:32:08 -0.994391 0.123296

FIRELBFGS: 20 04:32:08 -0.994449 0.091418

FIRELBFGS: 21 04:32:08 -0.994495 0.054286

FIRELBFGS: 22 04:32:08 -0.994519 0.012221

Distance in opt trajectory: [1.28, 1.2335089629222111, 1.1392699328939198, 1.0285911631178963, 1.0964431714345662, 1.1738131464936081, 1.1715131413850235, 1.1669709941864936, 1.160310604717144, 1.1517385248429906, 1.1415690055780352, 1.130255815977825, 1.118424872251103, 1.1184997505580012, 1.1186480666965142, 1.1188669733107373, 1.1191522821112356, 1.1194985597723826, 1.1198992503739216, 1.1203468201911215, 1.1208857684008715, 1.121520438052656, 1.1222486280893154]
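As a rough consistency check on the two trajectories above, the final distances can be compared with the analytic minimum of the Lennard-Jones potential, \(r_{min} = 2^{1/6}\sigma\). The sketch below assumes reduced units (\(\epsilon = \sigma = 1\)), which this section does not state explicitly; the variable names are illustrative only.

```python
# Assumption: reduced Lennard-Jones units (epsilon = sigma = 1); this is not
# stated in this section, so treat the comparison as a consistency check only.
epsilon = 1.0
sigma = 1.0

# Analytic minimum of V(r) = 4*eps*((sigma/r)**12 - (sigma/r)**6)
r_min = 2.0 ** (1.0 / 6.0) * sigma

# Curvature V''(r_min) = 72 * eps / r_min**2, used for a harmonic force estimate
k_min = 72.0 * epsilon / r_min**2

# Last entries of the two "Distance in opt trajectory" lists above,
# paired with the last logged fmax of each run
runs = {
    "start 1.5": (1.1215960942672192, 0.049889),
    "start 1.28": (1.1222486280893154, 0.012221),
}

estimates = {}
for label, (r_final, fmax_logged) in runs.items():
    f_est = k_min * abs(r_final - r_min)  # harmonic estimate of residual force
    estimates[label] = f_est
    print(f"{label}: r_final - r_min = {r_final - r_min:+.6f}, "
          f"harmonic force ~ {f_est:.4f}, logged fmax = {fmax_logged}")
```

Under these assumptions, both runs stop within about 0.001 of the analytic minimum, and the harmonic estimate of the residual force is close to the last logged fmax of each run.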

Further reading¶

The following book provides detailed explanations of the Newton method family, quasi-Newton methods, line search, and related methods.

“Numerical Optimization”, Jorge Nocedal and Stephen J. Wright, https://doi.org/10.1007/978-0-387-40065-5

The original paper is the best source for understanding FIRE: E. Bitzek et al., “Structural Relaxation Made Simple”, Phys. Rev. Lett. 97, 170201 (2006).

You can also study the algorithms by reading their ASE implementations.

[34]:

from ase.optimize import FIRE

??FIRE

Init signature:
FIRE(
    atoms: ase.atoms.Atoms,
    restart: Optional[str] = None,
    logfile: Union[IO, str] = '-',
    trajectory: Optional[str] = None,
    dt: float = 0.1,
    maxstep: Optional[float] = None,
    maxmove: Optional[float] = None,
    dtmax: float = 1.0,
    Nmin: int = 5,
    finc: float = 1.1,
    fdec: float = 0.5,
    astart: float = 0.1,
    fa: float = 0.99,
    a: float = 0.1,
    downhill_check: bool = False,
    position_reset_callback: Optional[Callable] = None,
    **kwargs,
)

Docstring: Base-class for all structure optimization classes.

Source:
class FIRE(Optimizer):
    @deprecated(
        "Use of `maxmove` is deprecated. Use `maxstep` instead.",
        category=FutureWarning,
        callback=_forbid_maxmove,
    )
    def __init__(
        self,
        atoms: Atoms,
        restart: Optional[str] = None,
        logfile: Union[IO, str] = '-',
        trajectory: Optional[str] = None,
        dt: float = 0.1,
        maxstep: Optional[float] = None,
        maxmove: Optional[float] = None,
        dtmax: float = 1.0,
        Nmin: int = 5,
        finc: float = 1.1,
        fdec: float = 0.5,
        astart: float = 0.1,
        fa: float = 0.99,
        a: float = 0.1,
        downhill_check: bool = False,
        position_reset_callback: Optional[Callable] = None,
        **kwargs,
    ):
        """
        Parameters
        ----------
        atoms: :class:`~ase.Atoms`
            The Atoms object to relax.

        restart: str
            JSON file used to store hessian matrix. If set, file with
            such a name will be searched and hessian matrix stored will
            be used, if the file exists.

        logfile: file object or str
            If *logfile* is a string, a file with that name will be opened.
            Use '-' for stdout.

        trajectory: str
            Trajectory file used to store optimisation path.

        dt: float
            Initial time step. Defualt value is 0.1

        maxstep: float
            Used to set the maximum distance an atom can move per
            iteration (default value is 0.2).

        dtmax: float
            Maximum time step. Default value is 1.0

        Nmin: int
            Number of steps to wait after the last time the dot product of
            the velocity and force is negative (P in The FIRE article) before
            increasing the time step. Default value is 5.

        finc: float
            Factor to increase the time step. Default value is 1.1

        fdec: float
            Factor to decrease the time step. Default value is 0.5

        astart: float
            Initial value of the parameter a. a is the Coefficient for
            mixing the velocity and the force. Called alpha in the FIRE article.
            Default value 0.1.

        fa: float
            Factor to decrease the parameter alpha. Default value is 0.99

        a: float
            Coefficient for mixing the velocity and the force. Called
            alpha in the FIRE article. Default value 0.1.

        downhill_check: bool
            Downhill check directly compares potential energies of subsequent
            steps of the FIRE algorithm rather than relying on the current
            product v*f that is positive if the FIRE dynamics moves downhill.
            This can detect numerical issues where at large time steps the step
            is uphill in energy even though locally v*f is positive, i.e. the
            algorithm jumps over a valley because of a too large time step.

        position_reset_callback: function(atoms, r, e, e_last)
            Function that takes current *atoms* object, an array of position
            *r* that the optimizer will revert to, current energy *e* and
            energy of last step *e_last*. This is only called if e > e_last.

        kwargs : dict, optional
            Extra arguments passed to
            :class:`~ase.optimize.optimize.Optimizer`.

        .. deprecated:: 3.19.3
            Use of ``maxmove`` is deprecated; please use ``maxstep``.

        """
        Optimizer.__init__(self, atoms, restart, logfile, trajectory, **kwargs)

        self.dt = dt

        self.Nsteps = 0

        if maxstep is not None:
            self.maxstep = maxstep
        else:
            self.maxstep = self.defaults["maxstep"]

        self.dtmax = dtmax
        self.Nmin = Nmin
        self.finc = finc
        self.fdec = fdec
        self.astart = astart
        self.fa = fa
        self.a = a
        self.downhill_check = downhill_check
        self.position_reset_callback = position_reset_callback

    def initialize(self):
        self.v = None

    def read(self):
        self.v, self.dt = self.load()

    def step(self, f=None):
        optimizable = self.optimizable

        if f is None:
            f = optimizable.get_forces()

        if self.v is None:
            self.v = np.zeros((len(optimizable), 3))
            if self.downhill_check:
                self.e_last = optimizable.get_potential_energy()
                self.r_last = optimizable.get_positions().copy()
                self.v_last = self.v.copy()
        else:
            is_uphill = False
            if self.downhill_check:
                e = optimizable.get_potential_energy()
                # Check if the energy actually decreased
                if e > self.e_last:
                    # If not, reset to old positions...
                    if self.position_reset_callback is not None:
                        self.position_reset_callback(
                            optimizable, self.r_last, e,
                            self.e_last)
                    optimizable.set_positions(self.r_last)
                    is_uphill = True
                self.e_last = optimizable.get_potential_energy()
                self.r_last = optimizable.get_positions().copy()
                self.v_last = self.v.copy()

            vf = np.vdot(f, self.v)
            if vf > 0.0 and not is_uphill:
                self.v = (1.0 - self.a) * self.v + self.a * f / np.sqrt(
                    np.vdot(f, f)) * np.sqrt(np.vdot(self.v, self.v))
                if self.Nsteps > self.Nmin:
                    self.dt = min(self.dt * self.finc, self.dtmax)
                    self.a *= self.fa
                self.Nsteps += 1
            else:
                self.v[:] *= 0.0
                self.a = self.astart
                self.dt *= self.fdec
                self.Nsteps = 0

        self.v += self.dt * f
        dr = self.dt * self.v
        normdr = np.sqrt(np.vdot(dr, dr))
        if normdr > self.maxstep:
            dr = self.maxstep * dr / normdr
        r = optimizable.get_positions()
        optimizable.set_positions(r + dr)
        self.dump((self.v, self.dt))

File: ~/.py311/lib/python3.11/site-packages/ase/optimize/fire.py

Type: type

Subclasses:
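To make the control flow of the step method shown above easier to follow, here is a minimal sketch of the same update rule applied to a toy two-dimensional quadratic function. It is not the ASE implementation: the function name fire_minimize, the toy gradient, the use of plain Python lists, and the whole-vector force norm as the stopping criterion are all assumptions made for illustration, and downhill_check and the restart handling are omitted.

```python
import math


def fire_minimize(grad, x0, dt=0.1, dtmax=1.0, nmin=5, finc=1.1, fdec=0.5,
                  astart=0.1, fa=0.99, maxstep=0.2, fmax=1e-3, max_iter=1000):
    """Toy FIRE loop (hypothetical helper, not part of ASE).

    Returns the final coordinates and the number of iterations used.
    """
    x = list(x0)
    v = None          # velocity; None marks the very first step
    a = astart        # mixing coefficient (alpha in the FIRE article)
    nsteps = 0        # consecutive steps with positive power v.f

    for it in range(max_iter):
        f = [-g for g in grad(x)]                  # force = -gradient
        fnorm = math.sqrt(sum(fi * fi for fi in f))
        if fnorm < fmax:                           # simplified convergence test
            return x, it

        if v is None:
            v = [0.0] * len(x)                     # first step: start at rest
        else:
            vf = sum(fi * vi for fi, vi in zip(f, v))
            if vf > 0.0:
                # Moving downhill: steer the velocity towards the force
                vnorm = math.sqrt(sum(vi * vi for vi in v))
                v = [(1.0 - a) * vi + a * fi / fnorm * vnorm
                     for vi, fi in zip(v, f)]
                if nsteps > nmin:
                    dt = min(dt * finc, dtmax)     # accelerate
                    a *= fa
                nsteps += 1
            else:
                # Moving uphill: stop, shrink the time step, reset mixing
                v = [0.0] * len(x)
                a = astart
                dt *= fdec
                nsteps = 0

        # MD-like update, with the displacement capped at maxstep
        v = [vi + dt * fi for vi, fi in zip(v, f)]
        dr = [dt * vi for vi in v]
        drnorm = math.sqrt(sum(d * d for d in dr))
        if drnorm > maxstep:
            dr = [maxstep * d / drnorm for d in dr]
        x = [xi + d for xi, d in zip(x, dr)]

    return x, max_iter


def grad(x):
    # Gradient of the toy potential E = 0.5 * (x0**2 + 2 * x1**2)
    return [1.0 * x[0], 2.0 * x[1]]


x_opt, n_iter = fire_minimize(grad, [1.0, 1.0])
print(n_iter, x_opt)
```

The default values mirror those in the signature above. The sketch keeps the two branches of the algorithm visible: acceleration while the power is positive, and a full stop with a halved time step otherwise.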
